From basement labs to pro studios, a silent revolution is reshaping the sound industry.

Why This Movement Is Exploding

In the past, audio engineering knowledge was guarded like a trade secret. Access to schematics, pro-grade tools, and DSP expertise was either prohibitively expensive or tightly controlled by corporations and institutions.

Today? That’s over.

We’re living in a time when:

- High-performance hardware is cheap and modular.

- Open-source frameworks are mature and production-ready.

- Communities are collaborating globally — sharing code, circuits, and IRs (impulse responses) faster than any manufacturer can patent them.

It’s not just a trend. It’s a seismic shift — from consumption to creation, from dependence to engineering literacy.

Let’s look at what’s actually being built, and how.

🛠️ 1. DIY Audio Hardware: Building Your Own Signal Chain

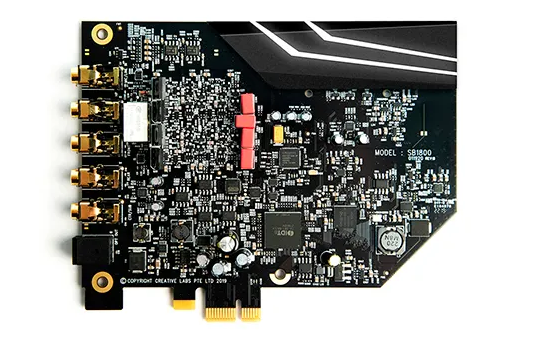

Yes — people are literally building their own audio interfaces, DACs, compressors, preamps, and summing mixers at home.

📦 The Tools:

- Arduino, Raspberry Pi, and STM32 boards for real-time audio control and signal routing.

- DIY-friendly DAC chips (like PCM5102A, AK4493, or ESS Sabre ES9023) with breakout boards.

- Low-noise op-amps (NE5532, OPA2134, etc.) for custom analog stages.

- PCB design tools like KiCad (open-source) and EasyEDA (free to use, but not open-source) for circuit prototyping.

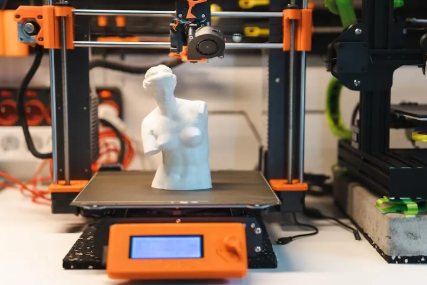

- Enclosures 3D-printed or CNC’d at home.

🧪 Example Projects:

- A fully analog, sidechain-capable stereo compressor based on 1176 schematics.

- A multichannel USB DAC + headphone amp with custom clocking and ultralow jitter.

- A DIY analog saturator using circuits that emulate transformer saturation.
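To make the compressor project a little more concrete, here is a minimal sketch of the static gain curve that any compressor, analog or digital, ends up implementing: below the threshold nothing happens, and above it the output rises by only 1/ratio dB per input dB. The function names, the -20 dB threshold, and the 4:1 ratio are illustrative assumptions, not values taken from any 1176 schematic. The "sidechain" part simply means the level that drives the gain comes from a separate key signal.

```python
def gain_reduction_db(key_level_db, threshold_db=-20.0, ratio=4.0):
    """Static hard-knee curve: gain change in dB for a given key level.

    threshold_db and ratio are hypothetical defaults for illustration.
    """
    if key_level_db <= threshold_db:
        return 0.0  # below threshold: signal passes unchanged
    overshoot = key_level_db - threshold_db
    # Output overshoot is overshoot / ratio, so the gain change is negative.
    return overshoot / ratio - overshoot


def db_to_linear(db):
    """Convert a dB value to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


def compress_sample(main_sample, key_level_db, threshold_db=-20.0, ratio=4.0):
    """Apply gain computed from the key (sidechain) level to the main signal."""
    gain_db = gain_reduction_db(key_level_db, threshold_db, ratio)
    return main_sample * db_to_linear(gain_db)


# A key signal 12 dB over the threshold at 4:1 is pulled down by 9 dB.
reduction = gain_reduction_db(-8.0)
```

A real compressor also smooths this gain over time with attack and release stages, which the sketch leaves out.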

The line between hobbyist and professional is blurring. Your “side project” can now rival, and sometimes outperform, legacy gear, if you build it right.

🎛️ 2. Writing Your Own Audio Plugins — No Fancy Degree Required

Want to build your own vintage EQ, limiter, or even a spectral reverb that auto-responds to BPM changes? You can — and people are doing it right now using frameworks like:

⚙️ JUCE (C++ Framework)

- Industry-standard for audio plugin development (VST3, AU, AAX).

- Cross-platform, highly customizable.

- Used by Arturia, Korg, Native Instruments.

🧰 Other Tools:

- Pure Data / Camomile: Visual programming for real-time audio, with Camomile wrapping Pure Data patches so they load as plugins in a DAW.

- Faust: A functional DSP language that compiles to VST, LV2, standalone, etc.

- Max/MSP + RNBO: From prototyping to plugin exporting.

- SuperCollider: For procedural/generative DSP and synthesis.

DIY engineers are no longer just building tools for themselves. They’re releasing them to the public — some open-source, some commercially — and shifting the industry in the process.
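Whatever framework you pick, the core of an EQ plugin is usually a small filter that processes audio one block at a time. The sketch below is framework-neutral Python rather than JUCE or Faust code. It uses the peaking-filter formulas from the widely circulated Audio EQ Cookbook by Robert Bristow-Johnson, and the names `peaking_eq_coeffs`, `Biquad`, and `process_block` are assumptions made up for this example.

```python
import cmath
import math


def peaking_eq_coeffs(sample_rate, center_hz, gain_db, q):
    """Peaking EQ biquad coefficients (Audio EQ Cookbook), normalized so a[0] == 1."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    b = [1.0 + alpha * amp, -2.0 * math.cos(w0), 1.0 - alpha * amp]
    a = [1.0 + alpha / amp, -2.0 * math.cos(w0), 1.0 - alpha / amp]
    return [v / a[0] for v in b], [v / a[0] for v in a]


class Biquad:
    """Direct-form I biquad that keeps its state between blocks."""

    def __init__(self, b, a):
        self.b, self.a = b, a
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def process_block(self, block):
        """Filter one block of samples and return the filtered block."""
        out = []
        for x in block:
            y = (self.b[0] * x + self.b[1] * self.x1 + self.b[2] * self.x2
                 - self.a[1] * self.y1 - self.a[2] * self.y2)
            self.x2, self.x1 = self.x1, x
            self.y2, self.y1 = self.y1, y
            out.append(y)
        return out


def response_db(b, a, sample_rate, freq_hz):
    """Magnitude response in dB at freq_hz, evaluated on the unit circle."""
    z1 = cmath.exp(-2j * math.pi * freq_hz / sample_rate)  # z^-1
    h = (b[0] + b[1] * z1 + b[2] * z1 * z1) / (a[0] + a[1] * z1 + a[2] * z1 * z1)
    return 20.0 * math.log10(abs(h))


# Hypothetical settings: a 6 dB boost at 1 kHz, Q of 1, 48 kHz sample rate.
b, a = peaking_eq_coeffs(48000.0, 1000.0, 6.0, 1.0)
eq = Biquad(b, a)
first_block = eq.process_block([1.0, 0.0, 0.0, 0.0])
```

Calling `response_db(b, a, 48000.0, 1000.0)` is a quick way to check that the boost lands where you asked for it before you wire the filter into a real plugin.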

🌀 3. Convolution Reverb From Your Church’s Stairwell (Yes, Really)

Why download a plugin when you can capture your own space?

Impulse response (IR) recording is now so accessible that even semi-pro engineers are creating convolution reverbs from:

- Grand cathedrals

- Tunnels, caves, forests

- Iconic studios

- Phone booths and freezers (yes, seriously)

🧪 How It Works:

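- Play a sine sweep through a speaker in the space, or fire a starter pistol as an impulsive source.

- Record the reverberated signal using stereo or surround mics.

- For a sweep, deconvolve the recording against the original sweep to get an impulse response file. A starter-pistol recording is already an approximate IR and mainly needs trimming.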

- Load it into any convolution reverb plugin (e.g. ReaVerb, Space Designer, Convology, IR1).

Boom: you now have your own reverb, literally shaped by a physical space you stood in.
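The sweep-and-deconvolve steps above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not a field-recording tool: the "room" is a made-up three-tap impulse response, the microphone recording is simulated by convolving the sweep with it, and the sweep is a short linear one running from 0 Hz to Nyquist. Real sessions typically use sweeps lasting several seconds, often logarithmic. Deconvolution here is a regularized spectral division, and every function name is hypothetical.

```python
import cmath
import math


def linear_sweep(num_samples):
    """Sine sweep rising linearly from 0 Hz to Nyquist over num_samples."""
    return [math.sin(math.pi * n * n / (2.0 * num_samples))
            for n in range(num_samples)]


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def ifft(spectrum):
    """Inverse FFT using the conjugate trick."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([s.conjugate() for s in spectrum])]


def convolve(a, b):
    """Direct time-domain linear convolution."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, av in enumerate(a):
        for j, bv in enumerate(b):
            out[i + j] += av * bv
    return out


def zero_pad(signal, size):
    """Return a copy of signal padded with zeros up to size samples."""
    return list(signal) + [0.0] * (size - len(signal))


def deconvolve(recording, sweep, ir_length, eps=1e-9):
    """Estimate the IR as H = Y * conj(S) / (|S|^2 + eps), back in the time domain."""
    size = 1
    while size < len(recording):
        size *= 2  # pad to a power of two that holds the full recording
    rec_spec = fft(zero_pad(recording, size))
    sweep_spec = fft(zero_pad(sweep, size))
    ir_spec = [y * s.conjugate() / (abs(s) ** 2 + eps)
               for y, s in zip(rec_spec, sweep_spec)]
    return [v.real for v in ifft(ir_spec)][:ir_length]


# Hypothetical "room": direct sound plus two quieter echoes.
room_ir = [0.0] * 64
room_ir[0], room_ir[20], room_ir[45] = 1.0, 0.5, 0.25

sweep = linear_sweep(256)
recording = convolve(sweep, room_ir)  # stands in for the microphone recording
estimate = deconvolve(recording, sweep, len(room_ir))
```

In a real capture, `recording` would come from the audio file you recorded in the space, and `estimate` would be written out as a WAV file for your convolution reverb to load. The small `eps` term keeps the division stable at frequencies the sweep barely excites.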